How to Assess Your Team's AI Skills in 15 Minutes

Before you spend $50K on AI training, figure out what your people actually need to learn

👋🏽 Hey, it's Anita. Welcome to AI@Scale, my weekly(ish) newsletter on scaling AI capabilities through strategic upskilling and organizational enablement.

Read Time: 6 minutes

I recently got a panicked call from a VP who’d just been asked to present an AI readiness assessment to their executive team by the end of the week.

“I’ve been stalling for weeks,” they admitted. “I just need to know what the hell my people actually need to learn.”

They’re not alone. Leaders everywhere are asking the same questions:

How do I know if someone is using AI effectively?

How do I coach my team on AI tools I’ve never used?

How do I even assess what my team needs to learn about AI?

We’re all managing AI adoption while feeling a bit like imposters. We’re making decisions about training and tools without clear insight into what our people actually need. Often, we guess based on vendor pitches, copy what other companies claim, or just hope that a “ChatGPT basics” course will solve everything.

But what if there’s a way to figure out exactly what your team needs to learn—in about 15 minutes per person?

Not a six-month consultant study. Not a 47-question survey nobody completes. Just honest, targeted conversations that give you the answers you need to get your AI programs moving.

The Problem With Most AI Readiness Assessments

Here’s what every training vendor will tell you:

“Our assessment will tell you exactly what your people need to learn.”

They actually mean:

“We’ll send you a 47-question survey that takes 30 minutes to complete, gets a 12% response rate, and tells you people need to learn ‘the fundamentals.’”

I once watched a company sink about $127K into an AI training program for 500 employees. When the program ended, less than 20% had completed it, and barely anyone was using the tools in their daily work.

Why? Because they never actually figured out what people needed to learn in the first place.

Why Most AI Assessments Fail

Most AI skills assessments ask the wrong questions. They focus on:

What people think they know — not what they’ve actually done

“Comfort levels” instead of real workflow friction

Enthusiasm instead of readiness to change

What you actually need to understand is:

➡️ What people have genuinely tried with AI (not just what they think they know)

➡️ Where AI could realistically help their current work (not made-up examples)

➡️ What’s actually stopping them from engaging (fear, confusion, or skepticism)

➡️ How ready they are to change how they work (not just learn new tools)

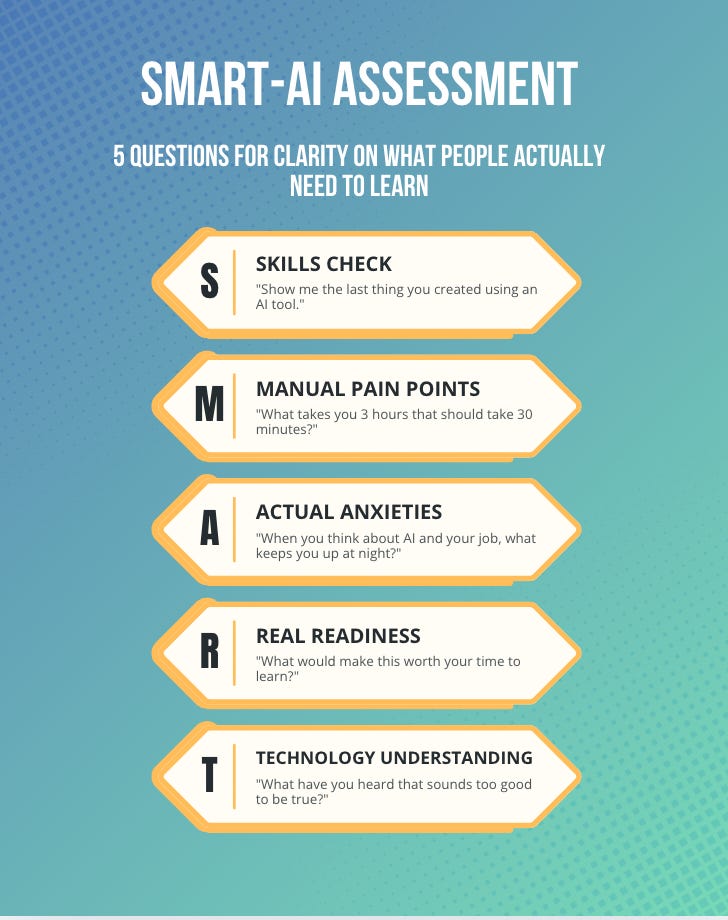

The SMART Framework for AI Readiness

You’ve probably heard of SMART objectives (Specific, Measurable, Achievable, Relevant, Time-bound). As a former educator, I can tell you the same acronym adapts surprisingly well to assessing AI readiness.

Here’s the SMART-AI framework I’ve used with dozens of teams. It’s not perfect, but it’s fast, honest, and actionable, and most importantly, it gives you what you need to start AI adoption.

S — Skills Check

Ask: “Show me the last thing you created using an AI tool.”

Most will name a tool (“Claude,” “Copilot,” “ChatGPT”) or admit “nothing.” Every one of these answers gives you a clear starting point. Those who say “ChatGPT” often tried it a few times, got mediocre results, and now use it only for basic tasks (or not at all). That points to a training problem, not a tools problem.

M — Manual Task Pain Points

Ask: “What takes you 3 hours that should take 30 minutes?”

This uncovers real workflow friction where AI could make a difference (not theoretical use cases, but actual time-suck problems that matter).

A — Actual Anxieties

Ask: “When you think about AI and your job, what keeps you up at night?”

You’ll hear fears about job displacement, feeling left behind, confusion, or skepticism. Training needs to match these anxieties: scared people need reassurance and augmentation examples; skeptics want proof and results; confused folks need step-by-step basics. One-size-fits-all “AI training” doesn’t cut it.

R — Real Readiness

Ask: “What would make this worth your time to learn?”

This reveals often-overlooked barriers: lack of time, support, relevance, or confidence, or bad past experiences. Unless these are addressed, adoption will stall.

T — Technology Understanding

Ask: “What have you heard about AI that sounds too good to be true?”

This helps you understand what misconceptions are floating around. Some might think AI is magic that replaces entire jobs; others think it’s just overhyped. Knowing their mindset lets you tailor your coaching.

What This Looks Like In Practice

Here’s an example from a mid-size professional services team I assessed (12 people, 3 hours total):

Skills: Only 3 had used ChatGPT for work; none had tried other AI tools

Manual Tasks: Everyone spent 4+ hours weekly on proposals, project reports, and research documentation

Anxieties: Half feared “AI will replace consultants,” half worried “Clients won’t trust AI-generated work”

Readiness: People would engage if training used their actual client projects and templates

Technology Understanding: Half thought AI could handle entire client engagements; half thought it was just a glorified spell-checker

How We Used This Assessment

Instead of generic “AI literacy training,” we designed hands-on sessions focused on:

Their real proposals and client deliverables

Addressing quality and trust concerns directly

Starting with prompt engineering for research and documentation

The Results

Two months later:

Everyone was using AI daily to address their own bottlenecks

Proposal and deliverable creation time dropped by ~35%

Two team members became internal AI champions, building custom tools to automate tasks

Your SMART-AI Conversation Starter Kit

Use these questions to assess your team’s AI readiness by role:

For Individual Contributors

S: “Show me the last thing you used AI to help with.”

M: “What part of your job feels like busy work?”

A: “What worries you most about AI in your role?”

R: “What would need to change for you to try a new AI tool?”

T: “What have you heard about AI that sounds too good to be true?”

For Managers

S: “Which team members are already experimenting with AI?”

M: “Where does your team spend time on work that feels automatable?”

A: “How does your team talk about AI when you’re not in the room?”

R: “What would your team need to adopt new AI tools?”

T: “What AI capabilities do you think exist that probably don’t?”

For Leadership

S: “What AI capabilities do we actually have in-house right now?”

M: “Where are we wasting time that AI could genuinely help?”

A: “What’s our plan for employees who resist AI adoption?”

R: “How much training and support are we prepared to provide?”

T: “What are we assuming about AI that might not be true?”

What to Do With Your Assessment Results

Stop overthinking it!

This week, pick three people representing different attitudes toward AI. Spend 15 minutes with each using the SMART questions. You should start seeing patterns that other assessments miss.

The goal isn’t perfection; it’s clarity. Once you know what you’re actually working with, every other decision (training approach, timelines, budgets) gets easier.

Try it and let me know what you discover. I’m genuinely curious what patterns show up in your organization.

Your assessment results will point you toward one of these starting strategies: